27 Aug Ever wondered what your pet is thinking?

Wouldn’t it be great to know what your pet is thinking? Pei-Yun Sun, Professor Uwe Aickelin, Rio Susanto and Yunjie Jia look at a new app that may help! And, yes, dogs are easier to read than cats.

The new app uses artificial intelligence to analyse and interpret the facial expressions of your pet.

What is going on behind those whiskers? Does your dog’s wagging tail mean they are happy? How can we understand or interpret the emotions of our pets?

Well, perhaps technology like Artificial Intelligence (AI) can help.

The idea of using AI to understand our pets was kicked around in the science tent at Splendour in the Grass 2019, an annual music festival in Byron Bay, where all things computing were discussed and, in particular, using AI for ‘good’.

The festival ended with a challenge. Could we create a pet emotion assessor by the time Splendour 2020 took place? While Splendour has been postponed due to COVID-19, the Happy Pets app we’ve developed as a result of that challenge is now ready.

The Importance of Facial Expressions

What might have sounded far-fetched at first actually raised some serious scientific questions.

Facial recognition for humans has become a mainstream and sometimes controversial technology – we use it to unlock our mobile phones, and airports are increasingly using it as part of their security.

Human faces tell us a lot about what someone is feeling or thinking – so, could the same facial recognition technology be used to interpret the emotions of animals?

Before we talk about the technology, let’s look at what we know about facial expressions. Psychologists and anthropologists tell us that they are one of the most important aspects of human communication.

The face is responsible for communicating not only thoughts or ideas but also emotions.

What researchers have discovered, and what is key to the success of our app, is that some emotions (like happiness, fear or surprise) seem to have biologically ‘hard-wired’ human expressions that hold true across ethnicities and societies.

So these expressions aren’t a result of cultural learning but are instead related to Darwinian evolution.

But what about animals? And can machines – or apps – check this?

Differentiating Patterns

From a technical perspective, there are two key steps.

The first is classifying the specific types of animals we’re talking about – in this case, pets. The second is identifying the key features and patterns that represent the underlying emotions. AI can help us with both.

The current state-of-the-art technology for image recognition in general is the Convolutional Neural Network. These are Deep Learning algorithms which can take in images and assign importance to different features within them.

This kind of technology is widely used, from vision in robotics to self-driving cars.

Neural Networks are loosely based on the way our brains work and are trained using a mechanism called supervised learning. Through supervised learning, the network learns what output (or label) should go with what input (an image).

In the same way you would teach a child to differentiate between an apple and a pear, for the algorithm, we adjust the weights and parameters of functions that transform inputs into outputs.

We do this until we have optimal results on training data – that is, if given a picture of an apple, the algorithm gives a high score that it thinks it is looking at an apple.
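As a rough illustration of that weight-adjusting loop (not the code behind Happy Pets), here is a minimal sketch in Python. It assumes each fruit has already been reduced to two invented numbers, "roundness" and "elongation", and trains a single artificial neuron on them. The data, the feature names and the function names are all hypothetical.

```python
import math

# Hypothetical toy data: (roundness, elongation) -> 1 for apple, 0 for pear.
# These numbers are invented purely for illustration.
TRAINING_DATA = [
    ((0.90, 0.20), 1),
    ((0.80, 0.30), 1),
    ((0.85, 0.25), 1),
    ((0.40, 0.90), 0),
    ((0.30, 0.80), 0),
    ((0.35, 0.85), 0),
]


def predict(weights, bias, features):
    """Return a score between 0 and 1 that the input is an apple."""
    z = sum(w * x for w, x in zip(weights, features)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def train(data, epochs=2000, learning_rate=0.5):
    """Repeatedly nudge the weights to shrink the error on labelled examples."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for features, label in data:
            # Positive error: score too high; negative error: score too low.
            error = predict(weights, bias, features) - label
            weights = [w - learning_rate * error * x
                       for w, x in zip(weights, features)]
            bias -= learning_rate * error
    return weights, bias


weights, bias = train(TRAINING_DATA)
apple_score = predict(weights, bias, (0.88, 0.22))  # an unseen, apple-like input
pear_score = predict(weights, bias, (0.32, 0.88))   # an unseen, pear-like input
print(f"apple-like input: {apple_score:.2f}, pear-like input: {pear_score:.2f}")
```

A real image classifier has millions of weights rather than three, but the principle is the same: compare the output with the label, then adjust.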

Creating feature maps

Convolutional Neural Networks are optimised for image recognition.

They work like normal neural networks but, in addition, they have the ability to extract and identify features from images, through a technique known as convolution.

The issue with facial features – like an arched eyebrow or a smirking mouth – isn’t so much what they look like, but the fact that they can appear ‘anywhere’ on an image. This is because photos can be taken from countless angles, under different lighting and at different zoom levels.

A filtering mechanism is used to correct for this and transform images into feature maps.

This can then be repeated to create feature maps of feature maps until huge volumes of data, involving millions of pixels, are reduced to succinct features. Labels for these images (or features) can then be learnt by the AI.
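To make the filtering idea concrete, here is a small sketch in plain Python. It is an assumption-laden toy, not our app's code: the filter is written by hand (a real Convolutional Neural Network learns its filter values), the 5 x 5 "images" are invented, and the helper names are hypothetical. It slides one filter over an image to build a feature map, then shrinks that map by keeping only the strongest response in each block.

```python
def convolve2d(image, kernel):
    """Slide the kernel over every position where it fits fully on the image."""
    k_rows, k_cols = len(kernel), len(kernel[0])
    out_rows = len(image) - k_rows + 1
    out_cols = len(image[0]) - k_cols + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(k_rows) for j in range(k_cols))
            for c in range(out_cols)
        ]
        for r in range(out_rows)
    ]


def relu(feature_map):
    """Discard negative responses, keeping only positive matches."""
    return [[max(0, value) for value in row] for row in feature_map]


def max_pool(feature_map, size=2):
    """Keep the strongest response in each size x size block."""
    rows = len(feature_map) // size
    cols = len(feature_map[0]) // size
    return [
        [
            max(feature_map[r * size + i][c * size + j]
                for i in range(size) for j in range(size))
            for c in range(cols)
        ]
        for r in range(rows)
    ]


# Hand-written filter that responds to a dark-to-bright vertical edge.
EDGE_FILTER = [[-1, 1],
               [-1, 1]]


def make_image(edge_column, size=5):
    """Toy image: dark (0) left of edge_column, bright (1) from it onwards."""
    return [[1 if c >= edge_column else 0 for c in range(size)]
            for _ in range(size)]


def strongest_response(image):
    """Largest value left after filtering and pooling."""
    pooled = max_pool(relu(convolve2d(image, EDGE_FILTER)))
    return max(max(row) for row in pooled)


edge_on_left = max_pool(relu(convolve2d(make_image(1), EDGE_FILTER)))
edge_on_right = max_pool(relu(convolve2d(make_image(3), EDGE_FILTER)))
```

The same filter fires whether the edge sits on the left or the right of the image; only the position of the response in the feature map changes. Applying further filters to the pooled maps gives the ‘feature maps of feature maps’ described above.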

For our mobile app, we used images extracted from online resources for the AI to learn what breed a pet is – is it a poodle or a labrador?

But we also wanted it to interpret what emotion the animal was expressing at the time.

Key to getting our app to work was well-curated and labelled training data covering many, many examples, along with lots and lots of parameter tweaking to optimise the performance of the neural nets.

The result is Happy Pets, which you can now download for free on Apple iOS or Google Android and try on your pet.

Your Dog Smiles!

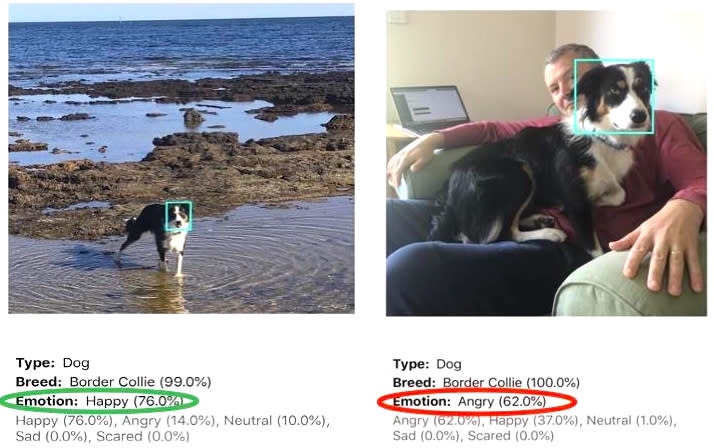

Our app classifies any unseen image – indicating a percentage for each of the five most common animal emotions (happy, angry, neutral, sad and scared) and the most likely breed.
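One common way for a neural network to turn its raw output scores into percentages like these is the softmax function. The sketch below assumes that approach purely for illustration; it is not a description of how Happy Pets computes its numbers, and the raw scores are invented.

```python
import math

EMOTIONS = ["happy", "angry", "neutral", "sad", "scared"]


def to_percentages(raw_scores):
    """Softmax: turn arbitrary scores into percentages that sum to 100."""
    highest = max(raw_scores)  # subtracted for numerical stability
    exps = [math.exp(score - highest) for score in raw_scores]
    total = sum(exps)
    return [100.0 * value / total for value in exps]


# Invented raw scores, one per emotion, standing in for a network's output.
raw_scores = [2.1, -0.3, 0.8, -1.0, 0.2]

percentages = to_percentages(raw_scores)
report = dict(zip(EMOTIONS, percentages))
top_emotion = max(report, key=report.get)

for emotion, percent in report.items():
    print(f"{emotion}: {percent:.1f}%")
```

The emotion with the highest raw score always ends up with the highest percentage, so the ranking is preserved while the numbers become easier to read.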

The AI detects emotions based on specific facial features that are associated with each emotion, which it has learnt from thousands of examples. For instance, if a dog tightens its eyes and mouth while changing the position of its ears in a characteristic way, it’s a sign of being scared.

How accurate are the results? Well, we think they are pretty solid, having extensively tested the app, but you should judge for yourself.

At the moment, we only have a limited number of breeds available, so if yours isn’t there, it might get approximated to the nearest one.

Interestingly, we have found that cats are harder to read than dogs. In the future, we have talked about analysing a pet’s body in addition to its face in order to improve accuracy.

Overall, a pet’s emotion can be difficult to discern, but we are pretty good at identifying human emotions. So, whether we can link human emotion to a pet’s emotion – whether they have common features – could be an exciting area of study in the future.

The Happy Pets app was developed in the School of Computing and Information Systems at the University of Melbourne by the Melbourne eResearch Group.

The team would love feedback about potential extensions of the app, which is available on Apple iOS or Google Android.

Professor Uwe Aickelin, Head, School of Computing and Information Systems, Melbourne School of Engineering, University of Melbourne

Yunjie Jia, Software Developer, Computing and Information Systems, and Pei-Yun Sun, Software Developer, Computing and Information Systems, University of Melbourne

This article was first published on Pursuit. Read the original article.